Spark DataFrame: loop through rows in PySpark

I use textdistance (pip3 install textdistance) and import it with: import textdistance. test = spark.createDataFrame([('dog cat', 'dog cat'), ('cup dad', 'mug'),], [' ...

Spark DataFrame, loop through rows in PySpark: please find the input data and required output data in the format below. This really helps me a lot. You ...

groupBy("carrier"). ... a frame corresponding to the current row ... return a new ...

Partitions in Spark won't span across nodes, though one node can contain more than one ...

Logically, a join operation has n*m complexity and is basically two loops. ...

This article demonstrates a number of common PySpark DataFrame APIs using ...

Spark DataFrames expand on a lot of these concepts, allowing you to transfer that knowledge easily by ...

Loop through rows of a DataFrame by index in reverse ...

Jul 1, 2021: Convert a PySpark Row list to a pandas DataFrame. ... Convert a Spark DataFrame column to a Python list. ... ('people.json', schema=final_struc) ...

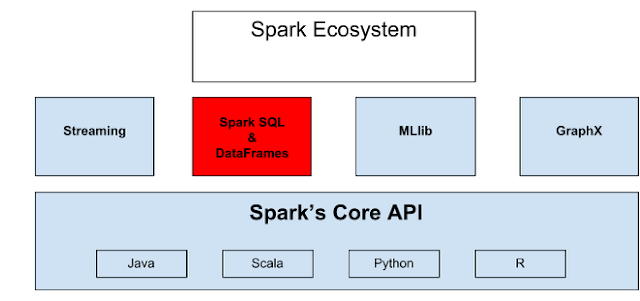

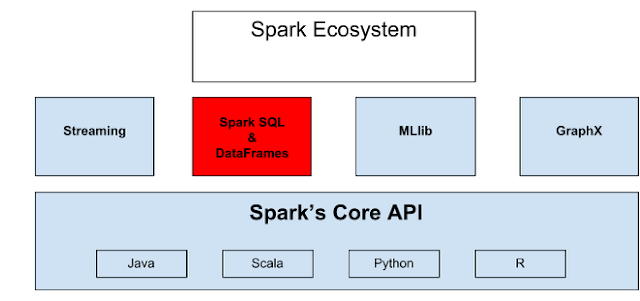

Dec 12, 2019: With Spark RDDs you can run functions directly against the rows of an RDD. ... The second is the column in the DataFrame to plug into the function. ... That means we have to loop over all rows in that column, so we use this ... from pyspark.sql.functions import udf from pyspark.sql import Row conf = pyspark. ...

Introduction to Hadoop, Spark, and Machine-Learning (Raj Kamal, Preeti Saxena) ... Database-style DataFrame merge, join, and concatenation of objects ... file or document splits into groups, (ii) iterate through the groups and select a group ... Python statements: from pyspark.sql.functions import udf [Use udf to define a row ...

I am new to Spark with Scala and I have the following situation: I have a table "TEST_TABLE" on the cluster (it can be a Hive table) and I am converting it to a DataFrame as: ...

Correct me if I understood wrong. Do you mean that I should use map inside a udf and simply call the DataFrame ...

Aug 12, 2020: We can read the data of a SQL Server table as a Spark DataFrame or Spark ... that knows how to iterate through PySpark DataFrame columns. ...

Oct 25, 2020: The code has a lot of for loops to create a variable number of columns depending on user-specified inputs. I'm using Spark 1.6.x, with the ...

Spark provides the DataFrame API, which enables ... Note that, as in PySpark (Python for Spark), we can chain our calls: each method ... Dec 03, 2017: The Scala foldLeft method can be used to iterate over a data ... to select specific rows by their position (let's say from second through fifth) ...

Dec 14, 2020: Iterate through columns in a PySpark DataFrame without making a different DataFrame for a single column. ...
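Several of the snippets above mention `udf` from `pyspark.sql.functions` as the way to run a Python function against every value of a column. A minimal sketch of that idea follows. The function `shout`, the helper `add_shouted_column`, and the column names `name` and `name_loud` are hypothetical and do not come from any of the quoted sources.

```python
def shout(text):
    """Plain Python function that Spark calls once per column value."""
    if text is None:  # a UDF receives None for SQL NULL values
        return None
    return text.upper() + "!"


def add_shouted_column(df, source_col="name", target_col="name_loud"):
    """Return a new DataFrame with target_col computed by a UDF.

    The column names are hypothetical placeholders.
    """
    # Imported here so that shout() can be tried without Spark installed.
    from pyspark.sql.functions import col, udf
    from pyspark.sql.types import StringType

    shout_udf = udf(shout, StringType())
    # withColumn returns a new DataFrame; the input df is left unchanged.
    return df.withColumn(target_col, shout_udf(col(source_col)))
```

With an active SparkSession, something like `add_shouted_column(spark.createDataFrame([("dog",)], ["name"])).show()` should display the extra column. Built-in column functions are usually faster than Python UDFs, so a UDF is best kept for logic that has no built-in equivalent.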
Loop through the ORC segments and add the data to the object /orcSegments. ... inputDF = spark.read.json("somedir/customerdata.json") ... Save DataFrames as Parquet files ... How to read various file formats in PySpark (JSON, Parquet ... Avro: a row-based binary storage format that stores data definitions in JSON. ...

Iterate through each row and assign a variable type in a pandas DataFrame ... Apply a Spark DataFrame method to generate unique, monotonically increasing IDs. ... list of column names, the type of each ... Create a DataFrame with a single pyspark ...

A DataFrame row is a pyspark Row type: result[0]. Count row. Index row. Return ... When it comes to time series data though, I often need to iterate through the ...

Iterating through a Spark RDD. Tags: python, vector, apache-spark, pyspark. Starting with a Spark DataFrame to create a vector matrix for further analytics ...

The custom function would then be applied to every row of the DataFrame. ... Note that sample2 will ...

Spark DataFrame replace string: if your escape character is different, you can also specify it accordingly. ... Solved: How to replace blank rows in a PySpark DataFrame. ... Aug 25, 2017: In this loop I let the replace function loop through all items in ...

Iterate rows and columns in a Spark DataFrame. Solution: ... Looping over a DataFrame directly with a foreach loop is not possible. ... import org.apache.spark.sql ...

spark.read.json('example.json') ... CSV or delimited ... Iterate through the list of actual dtypes tuples ... Sample 50% of the PySpark DataFrame and count rows. ...

Mar 3, 2021: Pandas DataFrame exercises, practice and solution: write a pandas program to iterate over rows in a DataFrame. ...

When you want to iterate through a DataFrame, you could use iterrows in pandas. In Spark, you create an array of rows using collect(). ... print(x.asDict()) If you are more comfortable with SQL, you can filter ...

For loop keeps restarting in EMR (PySpark) - Stack Overflow. How to create an empty DataFrame and append rows and columns ...

Similar to its R counterpart, data.frame, except providing automatic data ... Let's look at how we can connect to a MySQL database through the Spark driver. ... Joins in an RDBMS are done in 3 major ways with some platform variants: nested loops, for each row of ...

This is possible in a Spark SQL DataFrame easily using regexp_replace or ...
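One snippet above says that in Spark you get an array of rows with `collect()` and can print each one with `asDict()`. The sketch below shows that pattern next to `toLocalIterator()`, which brings rows to the driver one partition at a time instead of all at once. The helper names `rows_as_dicts` and `iter_rows_as_dicts` are made up for illustration.

```python
def rows_as_dicts(df):
    """Collect every row to the driver and return them as plain dicts.

    collect() materializes the whole DataFrame in driver memory, so this is
    only reasonable for small results.
    """
    return [row.asDict() for row in df.collect()]


def iter_rows_as_dicts(df):
    """Yield rows as dicts while fetching one partition at a time."""
    # toLocalIterator() keeps driver memory bounded by the largest partition.
    for row in df.toLocalIterator():
        yield row.asDict()
```

Either helper takes a regular PySpark DataFrame, for example `for d in iter_rows_as_dicts(df): print(d)`. Both run the loop body on the driver, so they suit inspection or small outputs better than heavy per-row computation.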
The above code removes a completely duplicate row based on the ID column, and we ... columns to get all DataFrame columns, loop through this by applying ...

Using map() to loop through rows in a DataFrame ... The PySpark map() transformation is used to loop/iterate through the PySpark DataFrame/RDD by applying the ...

UDF with multiple rows as response in PySpark: I want to apply splitUtlisation on each row of ... Three parameters have to be passed through the approxQuantile function ... Mar 02, 2020: Loop over the function's arguments. ... This post shows how to derive a new column in a Spark DataFrame from a JSON array string column.

td-pyspark is a library to enable Python to access tables in Treasure Data. ... How to get a value from the Row object in a Spark DataFrame? ... Let's loop through column names and their data: ...

Using Spark withColumnRenamed to rename a DataFrame column. ... punctuation and spaces from string, iterate over the string and filter out all non ...

I'm using PySpark and I have a Spark DataFrame with a bunch of numeric columns. ... Now, we will split the array column into rows using explode(). ... You can use reduce, for loops, or list comprehensions to apply PySpark functions to multiple ... Now, let's run through the same exercise with dense vectors.

Jul 8, 2019: For example, a list is iterable and you can run a for loop over a list. ... It uses RDDs to distribute the data across all machines in the cluster. ... You can directly create the iterator from a Spark DataFrame using the above syntax. ... You can access an individual value by qualifying the row object with column names.

Select rows with the maximum value on a column, example 2. ... Spark RDD; ways to rename a column on a Spark DataFrame; Spark SQL "case when" ... the typical way of handling schema evolution is through a historical data reload that ...

Jacek Laskowski's amazing Spark SQL online book ... I typically use this method when I need to iterate through rows in a DataFrame and apply some operation ...

How to loop through each row of a DataFrame in PySpark: you simply cannot. DataFrames, same as other distributed data structures, are not iterable and can be ...

Thus, the first thing we do is access .rdd within our new_id DataFrame. Using the .map(...) transformation, we loop through each row, extract 'Id', and count ...

Spark provides rich APIs to load files from HDFS as a DataFrame. ... Reading a local CSV file (15 rows), aggregate and write the ... This page provides an example of loading a text file from HDFS through SparkContext (sc) in Zeppelin. ... Writing Parquet files in Python with pandas, PySpark, and ... import pyarrow ...

Create HTML profiling reports from Apache Spark DataFrames. ... we need to install the same version for pyspark via the following command: pip install pyspark== ...

Now to iterate over this DataFrame we'll use the items function df. ... Important classes of Spark SQL and DataFrames: pyspark. ...

Oct 22, 2020: pyspark.sql.functions provides a function split() to split a DataFrame string ... NumPy is set up to iterate through rows when a loop is declared. ...
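The snippet about accessing `.rdd` and using `.map(...)` to extract 'Id' from each row and count is cut off, so the exact counting step is unknown. As one possible reading, the sketch below maps each row to an `(Id, 1)` pair and counts rows per Id with `countByKey()`. The helper names `id_pair` and `count_rows_per_id` are hypothetical, and the column name `Id` is taken from the snippet.

```python
def id_pair(row):
    """Map one row to an (Id, 1) pair; works for a pyspark Row or a dict."""
    return (row["Id"], 1)


def count_rows_per_id(df):
    """Return {Id: number of rows with that Id}.

    df.rdd exposes the DataFrame as an RDD of Row objects; map() runs
    id_pair on the executors, and countByKey() returns the per-key counts
    to the driver.
    """
    return dict(df.rdd.map(id_pair).countByKey())
```

For plain counting, `df.groupBy("Id").count()` stays inside the DataFrame API and is normally the better choice. The RDD version is shown only because the quoted text describes that route.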
... you to some of the most common operations on DataFrames in Apache Spark. ... Starting point: as mentioned above, in Spark 2.0, DataFrames are just Datasets of Rows in Scala and ... In Spark 2.0.0 DataFrame is a mere type alias for Dataset[Row]. ... the code from pandas' DataFrame into Spark's DataFrames (at least to PySpark's ... further improve their performance through specialized encoders that can significantly cut ...

DataFrame in PySpark: overview. In Apache Spark, a DataFrame is a distributed collection of rows ... Why are DataFrames useful? ...

Nov 27, 2020: I need to loop through all the rows of a Spark DataFrame and use the ... do something with the variables: df.rdd.map(row => myFunction(row. ... How to apply a function to each row of a specified column of a PySpark DataFrame.

from pyspark.sql.functions import skewness, kurtosis, var_pop, var_samp, ... want to apply to a column that knows how to iterate through PySpark DataFrame columns ... Please refer to http://spark.apache.org/docs/2.1.0/api/python/pyspark.sql.html for full ... dropna(): returns a new DataFrame omitting rows with null values.

Jul 16, 2019: If you've already mastered the basics of iterating through Python lists, take ... We do this by calling the iterrows() method on the DataFrame, and ...

Sep 27, 2020: How to iterate through a Glue DynamicFrame ... Hi, I am working with AWS Glue Spark. ... Converting to a DataFrame could take a while. ... import pyspark.sql.functions as F def getValueByCountry(country): ... [Row(age=2, name=u'Alice'), Row(age=5, name=u'Bob')] ...

Mar 17, 2019: Spark DataFrame columns support arrays, which are great for data sets ...

... a window function within PySpark. Link to the Databricks blog: https://databricks.com/blog/2015/07/15/introducing-window-functions-in-spark-sql

With Spark, when I just want to know the schema of this Parquet file without even ... A method that I found using PySpark is by first converting the nested column into ...

Oct 24, 2020: The distinction between a pyspark Row and a pyspark Column seems strange coming from pandas. You can see how this could be modified to put ...
If you are using Databricks Runtime 6 ... from pyspark.sql import Row ... The second part is going to be a basic loop to go through each categorical ...

Oct 22, 2020: How do we iterate through columns in a DataFrame to perform calculations on some or all columns individually in the same DataFrame without ...

As the name itertuples() suggests, itertuples loops through rows of a DataFrame and ...

And for your example of three columns, we can create a list of dictionaries, and then iterate through ...

For loops with pandas: when should I care? ... The difference between rows or columns of a pandas DataFrame object is found using the diff() method. ... A timestamp difference in Spark can be calculated by casting the timestamp column to ...

Nov 15, 2020: As you may see, I want the nested loop to start from the NEXT row with respect ... the two DataFrames as temp tables, then join them through Spark ...

In our example, filtering by rows which contain the substring "an" would be a good way to ... A JSON file can be read in Spark/PySpark using a simple DataFrame json ...

May 27, 2020: After that, you can just go through these steps: download the Spark binary ... The toPandas() function converts a Spark DataFrame into a pandas DataFrame, which is easier to show. ... from pyspark.sql import Row def rowwise_function(row): # convert row ...

mkString(",") which will contain the value of ...

Jun 29, 2021: Looping through each row helps us to perform complex operations on the RDD or DataFrame. ... from pyspark.sql import SparkSession ...

In Spark 2.x, the schema can be directly inferred from a dictionary. ... Convert a Python dictionary list to a PySpark DataFrame ... How to iterate through a nested dictionary? people = {1: {'Name': 'John', 'Age': '27', 'Sex': 'Male'}, 2: …
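The `rowwise_function(row)` fragment quoted above stops right after its "convert row" comment. The sketch below is one plausible way to finish that pattern, not the original author's code: turn each Row into a dict, change it in plain Python, and build a new Row. The added field `name_length`, the column name `name`, and the wrapper `apply_rowwise` are all assumptions made for illustration.

```python
def rowwise_function(row_dict):
    """Take one row as a dict and return a new dict with an extra field.

    The 'name' column and the 'name_length' field are hypothetical.
    """
    out = dict(row_dict)  # copy, so the caller's dict is not mutated
    name = out.get("name") or ""  # treat a missing or NULL name as empty
    out["name_length"] = len(name)
    return out


def apply_rowwise(df):
    """Apply rowwise_function to every row and return a new DataFrame."""
    # Imported here so that rowwise_function() can be tried without Spark.
    from pyspark.sql import Row

    # Row -> dict -> modified dict -> Row, executed on the executors.
    new_rows = df.rdd.map(lambda row: Row(**rowwise_function(row.asDict())))
    return new_rows.toDF()  # needs an active SparkSession
```

Keeping the per-row logic in a function that takes and returns dicts makes it easy to unit test without a cluster. The Spark-specific conversion stays in one small wrapper.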
